With just a few clicks, Stitch starts extracting your Microsoft Azure data, structuring it in a way that's optimized for analysis, and inserting that data into a data warehouse that can be easily accessed and analyzed by Google Data Studio.


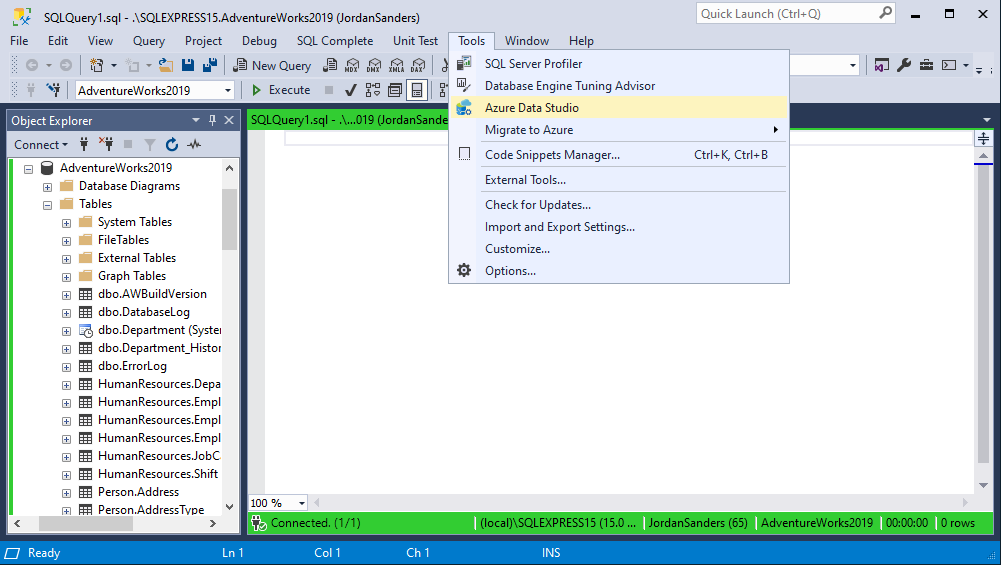

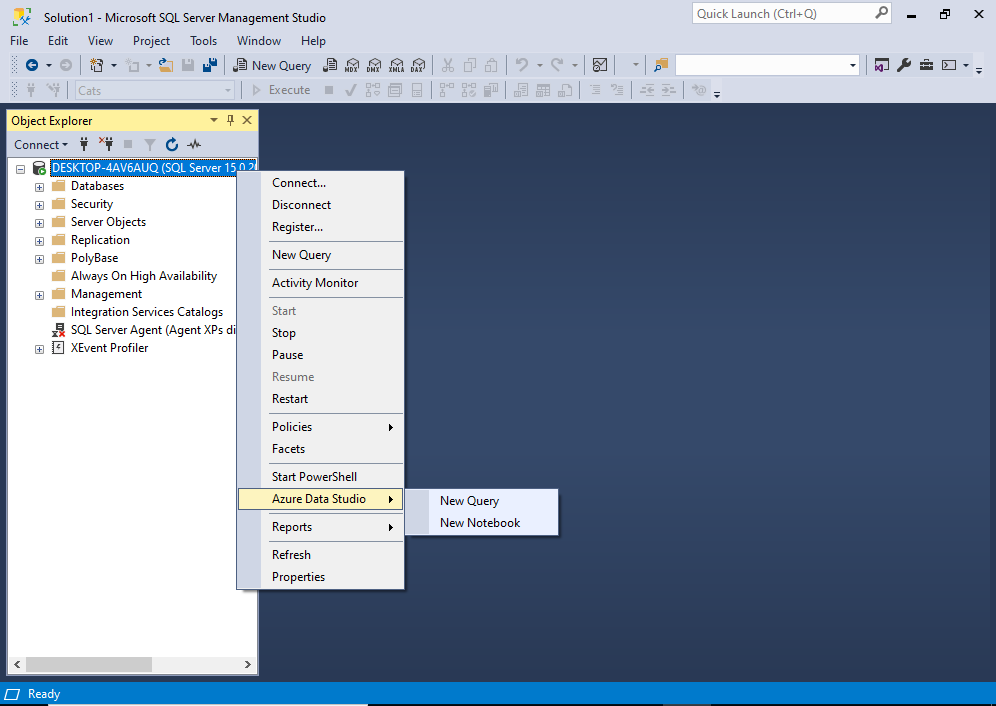

From Microsoft Azure to your data warehouse: An easier solution

As mentioned earlier, the best practice for analyzing Microsoft Azure data in Google Data Studio is to store that data inside a data warehousing platform alongside data from your other databases and third-party sources. You can find instructions for doing these extractions for leading warehouses on our sister sites Microsoft Azure to Redshift, Microsoft Azure to BigQuery, Microsoft Azure to Azure Synapse Analytics, Microsoft Azure to PostgreSQL, Microsoft Azure to Panoply, and Microsoft Azure to Snowflake.

Easier yet, however, is using a solution that does all that work for you. Products like Stitch were built to move data automatically, making it easy to integrate Microsoft Azure with Google Data Studio.

Download Azure Data Studio, then search for and install the extension Database Migration Assessment for Oracle. Generate the output and identify the PoC workload, then build a plan with initial waves of simpler migrations, working towards the workloads that require more effort, planning, and orchestration. Aqua Data Studio provides a database management tool for Microsoft Azure SQL databases.

At this point you've successfully moved data into your data warehouse. But how will you load new or updated data? It's not a good idea to replicate all of your data each time you have updated records. That process would be painfully slow and resource-intensive.

Instead, identify key fields that your script can use to bookmark its progress through the data and to pick up where it left off as it looks for updated data. Fields whose values only increase, such as updated_at or created_at timestamps, work best for this. When you've built in this functionality, you can set up your script as a cron job or continuous loop to get new data as it appears in Azure.

And remember, as with any code, once you write it, you have to maintain it. If Azure sends a field with a datatype your code doesn't recognize, you may have to modify the script. If your users want slightly different information, you definitely will have to.
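The bookmark approach to loading only new or updated records can be sketched roughly as follows. This is a minimal illustration, not Stitch's implementation: the `records` table, its columns, and the `sync_incremental` helper are hypothetical, and in-memory SQLite databases stand in for both the Azure source and the data warehouse.

```python
import sqlite3


def make_db():
    """Create an in-memory database with a hypothetical `records` table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE records (id INTEGER PRIMARY KEY, name TEXT, updated_at TEXT)"
    )
    return conn


def sync_incremental(source, warehouse, bookmark):
    """Copy rows changed after `bookmark` and return the new bookmark."""
    rows = source.execute(
        "SELECT id, name, updated_at FROM records "
        "WHERE updated_at > ? ORDER BY updated_at",
        (bookmark,),
    ).fetchall()
    # INSERT OR REPLACE overwrites the earlier copy of a row with the same id.
    warehouse.executemany(
        "INSERT OR REPLACE INTO records (id, name, updated_at) VALUES (?, ?, ?)",
        rows,
    )
    warehouse.commit()
    # Advance the bookmark to the newest timestamp seen, if any rows arrived.
    return rows[-1][2] if rows else bookmark


# Stand-ins for the source system and the warehouse (assumptions for the demo).
source, warehouse = make_db(), make_db()
source.executemany(
    "INSERT INTO records VALUES (?, ?, ?)",
    [(1, "alpha", "2024-01-01T00:00:00"), (2, "beta", "2024-01-02T00:00:00")],
)

# First run: an empty bookmark sorts before every timestamp, so all rows copy.
bookmark = sync_incremental(source, warehouse, "")

# Later, one source row changes; only that row is transferred on the next run.
source.execute(
    "UPDATE records SET name = 'beta-v2', updated_at = '2024-01-03T00:00:00' "
    "WHERE id = 2"
)
bookmark = sync_incremental(source, warehouse, bookmark)
```

In a real deployment the bookmark would be persisted between runs (for example in a file or a state table) so that a cron job can resume from it. Note that the strict `>` comparison can miss a row that is written later with exactly the same timestamp as the bookmark; a production script would need to account for that.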
